IACP has established “Building Community Trust” as a key priority. With regard to technology, building community trust means recognizing that any technology can produce both intended and unintended consequences. When exploring and comparing public safety uses of artificial intelligence (AI), it is prudent to acknowledge both the power of these tools and the fact that no one clearly understands all the possible ramifications of their many uses. What works in one community might not be valuable in another.

AI is already part of people's personal and business lives. Anyone who has a smartphone, uses a digital camera, drives a vehicle, gets packages or food delivered, or watches videos or listens to podcasts on applications that suggest what to play next is using or benefitting from AI. Planned uses of AI seem to expand daily. Self-driving vehicles, health monitoring and healthy-behavior prediction, and real-time algorithmic financial trading are getting a lot of attention. This article is intended as an overview, not a deep dive into AI.

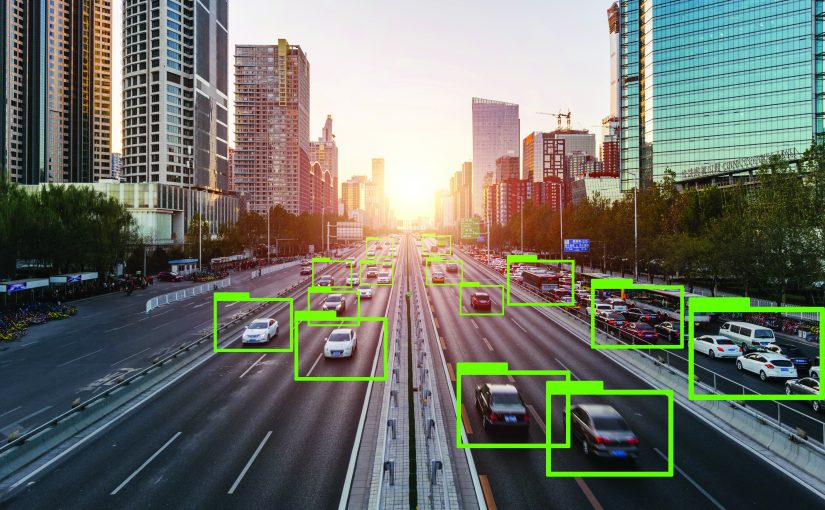

It might be helpful to define the most common components of AI here. The most common form of AI is machine learning (ML). ML algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. ML algorithms are used in a wide variety of applications, such as medicine, email filtering, speech recognition, and computer vision. Deep learning (DL) detects or identifies layered, structured patterns or nuances not typically recognized by the human mind. DL methods have been applied to fields including speech recognition, natural language processing, and machine translation. To some, these definitions will seem oversimplified; however, for the discussion here, it’s best to keep it simple.
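
For readers who want to see the idea of "learning from training data" in concrete terms, the following is a minimal sketch of an email filter, one of the ML applications mentioned above. It is an illustration under stated assumptions, not a production method: the four training messages, the labels `spam` and `ham`, and the function names `train` and `predict` are all invented for this example. The point is that no rule such as "block the word *free*" is written by hand; the program derives its behavior from the labeled examples it is given.

```python
from collections import Counter

# Hypothetical training data, invented for illustration:
# each entry is (message text, label assigned by a person).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the patrol schedule", "ham"),
]


def train(examples):
    """Build the model: count how often each word appears under each label."""
    model = {}
    for text, label in examples:
        model.setdefault(label, Counter()).update(text.lower().split())
    return model


def predict(model, text):
    """Return the label whose training words best match the message.

    For each label, add up the training counts of the message's words
    and divide by the total number of words seen for that label.
    """
    words = text.lower().split()
    scores = {}
    for label, counts in model.items():
        total = sum(counts.values())
        scores[label] = sum(counts[w] for w in words) / total
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
print(predict(model, "claim your free prize"))
print(predict(model, "agenda for the patrol meeting"))
```

Changing the training messages changes the predictions without any change to the code, which is the essence of the ML definition given here. Real systems use far more data and more sophisticated models, but the train-then-predict pattern is the same.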